Building a Proxmox cluster

Introduction

I haven't posted on this blog for over a year. There are multiple reasons for that, which I might explain someday, but I don't want to go into them now. Except for one thing... I have been keeping myself busy with jodiBooks and stuff I wanted to do for myself. This was one of them.

For years I wanted to build my own proper server setup, but all kinds of "rational" reasons prevented me from doing so: "Having your own server is too expensive", "it's too wasteful with electricity", "it's too much work", "you don't have the time", "it's too much risk". Well, I still agree that all of them are true, but fuck it, I wanted my own servers.

After years of struggling with a (more than decent) custom-built home server, I decided to buy some proper equipment. My idea of what exactly I wanted was still really vague. And because I still consider this a semi-hobby project (jodiBooks is my main project), I looked for a cheap used server. After some browsing through classifieds on the Dutch site Tweakers, I found ads for two servers from the same seller and decided to buy both and see what would happen.

That was 3 months ago and, man, I learned a lot in this short period. It has been a hard and frustrating, but ultimately satisfying ride. And I am glad I finally have some time to write everything down (🤞please don't crash now and make me start from scratch🤞).

On my website I have written down all the nitty-gritty details of how to build and configure these servers. Here I want to tell you more about the background of the project, like why I did it and what I learned.

History

Ever since my dad bought his first PC I have been intrigued by computers. I can still remember that I wanted to upgrade the RAM of one of our first computers to 64 MB! Omg, I almost had to beg my dad to let me do it. He was so afraid I would ruin the computer that I could only do it if someone who had done it before was with me. Luckily, or of course, everything went OK, and later my brother and I were allowed to add a graphics card. That was so cool. It sounds weird now, but instantly every game looked so much better. It gave me a feeling of power: look what I can do, I can make things better.

Later, when I went to university (15 years ago), I saw all this network equipment in the university buildings and it gripped me. I can't explain exactly what it is, but I like flashing lights, knowing the flashing means something. There is actual "stuff" going through those cables, and every flicker of an LED shows someone is sending or receiving data. It's sort of magical.

In that same period I got my own laptop, and through study loans and some work I got the funds to buy a PC. I knew I wasn't going to build a full network with all those lights from scratch; that was way above my understanding. So I just built a PC for myself. Over the years, however, this PC morphed into a server. It changed so much that the latest incarnation looked nothing like the initial PC: the processor, graphics card, hard disks, mainboard, monitor and chassis all changed. It even got watercooling.

After graduating and getting a job, I finally had the money to do things properly. Through the years I experimented with different chassis, operating systems, water cooling, virtualization and more. That was until we started jodiBooks and there was no time left to "play around". Other things were always more important and taking risks with serving our applications and tools was not a smart thing to do.

So there we were, a man in crisis who didn't allow himself a moment of play. Until a light started burning (or flickering) again and I decided to build the server(s) I always wanted. I knew it would be frustrating and cost me days of Googling, but it is always so satisfying to see those lights flickering, knowing I built it.

What I wanted to do

I wanted to build a server that was capable of storing lots of data safely and powerful enough to run a lot of virtual machines. I had read an article on web3 and wanted a server that could run those applications as well.

So I made a list of things this server (or servers) should do:

- Store lots of data

- Run virtual machines

- Do network routing

- Automate lots of stuff for jodiBooks

- Let me fiddle with crypto/web3

Hardware selection

My existing (home) server, the one that was the pinnacle of all my previous work, had a Supermicro X9SCM mainboard, a Xeon E3-1230 v2 CPU and 32 GB of ECC RAM. I also installed an IBM ServeRAID M1115 HBA card, which has an LSI SAS2008 chip. Back when I built it, this was one of the most recommended HBA chips. Together with 4x 2TB and 43x 3TB disks, it was an amazing machine. Especially the chassis: a Fractal Design Node 804. It has lots of space, is very quiet and its fan filters catch a lot of dust.

Although this server has been of great service and has helped me learn so much, it just didn't have enough RAM to do this seriously and the disks were running on their last legs. It was running some virtual machines for jodiBooks in VirtualBox, but I didn't dare to store any data on it or host a website or production-like application on it. After all those years it just felt too unreliable.

Normally I would have started with a plan, made an Excel sheet and looked for hardware that fits and isn't too expensive. This time, however, I just listed all available second-hand servers and bought the two that were most convenient (we happened to be in the neighborhood). So I bought an old Dell T320 and an HP ML350p. I then upgraded them by adding RAM, and in the case of the ML350p I swapped out the CPUs.

Because I hadn't planned properly, but mainly because my requirements changed, more hardware was needed. I then found out that I could buy server hardware really cheaply on eBay. Omg, why didn't I think of that earlier? Anyway, I bought SSDs, HDDs, a new HBA (see "OS filesystem"), changed the CPUs of the ML350p again and more; I don't remember exactly what anymore. It all culminated in these two servers.

HP(E) branded hardware requirement

HP, now HPE, is notorious for being very picky about the hardware you install. If it is not HP(E) labeled/branded, the server doesn't recognize the sensor data and assumes the worst. This results in very high fan speeds and thus an unbearable amount of noise. So in the HP server I had to install an HP branded HBA: the HP H220.

| Server | Type | CPU | Cores / threads | RAM |
|---|---|---|---|---|
| 1 | HP ML350p Gen8 | 2x Xeon E5-2650 v2 | 16/32 | 112 GB |
| 2 | Dell T320 gen 12 | 1x Xeon E5-2420 v2 | 6/12 | 64 GB |

| Server | OS disk size | OS disk config | Data disk size | Data disk config |
|---|---|---|---|---|
| 1 | ~800 GB | SSD - RAID-Z2 | - | - |
| 2 | ~500 GB | SSD - ZFS mirror | 12.3 TB | HDD - RAID-Z2 + hot spare |

| Server | HBA type | HBA chip | HBA firmware |
|---|---|---|---|
| 1 | HP H220 | LSI SAS2308 | HP v13 hba mode* |
| 2 | Dell Perc H310 | LSI 9205-8i | LSI IT mode |

Hypervisor selection

The most difficult decision was choosing the hypervisor. The hypervisor is the main software that runs on the server and allows it to run virtual machines. I knew ESXi from my first dabbles in home servers, but later I ditched it in favor of Ubuntu (Linux) with VirtualBox. That is not the way you want to run virtual machines in a production environment, so I tried multiple hypervisors and ultimately settled on Proxmox.

Why Proxmox? Well, as I said, I used ESXi before. I think its GUI is amazing, but as a free product the functionality is too limited. I really wanted to make backups and move VMs between servers, which is not possible with the free license. So I searched for free, open-source alternatives and found KVM with Cockpit, Proxmox, OpenNebula, Xen and oVirt.

In short (TLDR)

- Price: free
  - Proxmox is free and open-source, as opposed to ESXi (VMware).
- Technology: KVM / QEMU
  - Proxmox is based on KVM, which is built into the Linux kernel.
  - Big players (AWS) are migrating from Xen, the "other" open-source option, to KVM.
  - I couldn't find even the most basic Xen commands, so I couldn't start the GUI after a reboot. This put me off too much to continue.
- Ease of use: GUI
  - Proxmox was the only KVM-based option that I actually got to a stable working state.
  - KVM with the Cockpit GUI (on Ubuntu) is too fragile and limited. Most things still needed to be done through the CLI.
  - OpenNebula's implementation is too complicated. Everything needs to be configured manually.
  - oVirt needs a dedicated storage machine.
  - ESXi so far has the best GUI in my opinion, but the free version is too limited.

I started with Cockpit, as I already had Ubuntu installed and KVM is available in the kernel. However, it felt too fragile and I couldn't do a lot of things through the GUI. Also, documentation for running KVM/QEMU through the command line is very hard to find. I now see that "The Cockpit Web Console is extendable". Well, that would have helped, but nobody mentioned a word about extensions in the tutorials and manuals I found.

The next try was Proxmox. At first I didn't want it, as it is not a stand-alone application but a full OS. Or at least, it needs Debian and I had Ubuntu installed. Maybe it works on Ubuntu, but I didn't want to go there. However, when I installed it (which was really easy) I had a VM running in a few minutes. I then configured more and more, converted and imported all my VirtualBox VMs, clustered my servers and fucked up. I was stupid enough to edit the config files without backing them up.

I was so pissed that I didn't want anything to do with Proxmox anymore, so I tried several other hypervisors. The first was Xen server (XCP-ng) with Xen Orchestra. I had some trouble with this too. The GUI (Xen Orchestra) runs as a VM in Xen server. After a reboot this VM wasn't started, so I couldn't access it to start it... Get it? Also, I couldn't find how to start a VM through the command line. Seriously, this should be so straightforward, yet I couldn't find that command. On top of that, I couldn't get a VM with Golem to work on Xen. Which was to be expected, as they wrote: "We do not support XEN hypervisor".

Next up: OpenNebula. They claimed to be superior to Proxmox, although they use the same technology (KVM). First, installation is way harder than with Proxmox or Xen. Second, when you finally have it in a running state, the number of configuration options is enormous. I just drowned in them; it was too frickin' huge. Thinking back, OpenNebula has a different use case (running your own cloud), so it makes sense it wasn't for me.

The last one I tried was oVirt. Installation again was a breeze, but in the initial configuration there was a hiccup: oVirt expects you to have a centralized storage node for your VM disks. That was a bit above my station. So in the end I did go back to Proxmox, with this warning printed in big font and flashing in my mind:

Always make backups

Proxmox config is very delicate. Almost all settings can be configured through the GUI, but sometimes you might have to dig into config files with the CLI. ALWAYS make a backup before doing so! I learned the hard way that messing up only one file (especially related to the cluster) can mean a full reinstall of that cluster!
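In practice "make a backup" can be as simple as copying the file somewhere safe before you open it in an editor. Below is a minimal Python sketch of that habit, assuming you run it as root on the node. The backup directory `/root/pve-config-backups` is a hypothetical name I made up; the one deliberate choice is keeping it outside `/etc/pve`, because that directory is Proxmox's cluster file system, and when the cluster is in trouble it can become read-only or unavailable together with your copies.

```python
import shutil
import sys
from datetime import datetime
from pathlib import Path

# Hypothetical backup location, deliberately outside /etc/pve so a broken
# cluster file system does not take the backups down with it.
BACKUP_DIR = Path("/root/pve-config-backups")


def backup_config(path: str, backup_dir: Path = BACKUP_DIR) -> Path:
    """Copy `path` to a timestamped file in `backup_dir` and return the copy's path."""
    source = Path(path)
    if not source.is_file():
        raise FileNotFoundError(f"nothing to back up: {source}")
    backup_dir.mkdir(parents=True, exist_ok=True)
    # Microseconds in the stamp so two backups in the same second don't collide.
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S-%f")
    target = backup_dir / f"{source.name}.{stamp}.bak"
    shutil.copy2(source, target)  # copy2 also keeps timestamps and permission bits
    return target


if __name__ == "__main__":
    # Example: python3 backup_config.py /etc/pve/corosync.conf
    for arg in sys.argv[1:]:
        print(f"backed up {arg} -> {backup_config(arg)}")
```

Restoring is the same copy in the other direction, so a bad edit costs a minute instead of a cluster reinstall.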

Putting it all together

OS filesystem

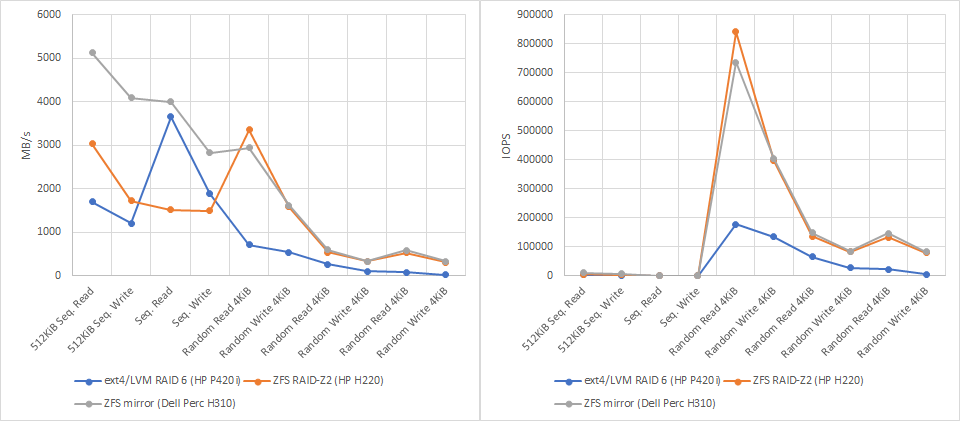

First I had to install Proxmox on both servers. You can read how that works in How to install and configure Proxmox. At first I hooked up the 6 HP SSDs I bought off eBay to the P420i RAID card in the ML350p server. However, the performance wasn't what I expected. I read somewhere (but forgot to save the source) that RAID 6 is hard on older RAID cards. That made me decide to buy an HBA and use a software RAID solution instead. Proxmox supports ZFS out of the box, and with those beefy CPUs, ZFS RAID-Z2 should be no problem.

I don't know how to benchmark this properly, but I found a script on StackExchange that should at least give me some relative numbers: hardware RAID 6 versus software RAID-Z2. The results with a test size of 1024MB are shown in the table below.

| File system type | Card type | Unit | 512KiB Seq. Read Q=1 T=1 | 512KiB Seq. Write Q=1 T=1 | Seq. Read Q=32 T=1 | Seq. Write Q=32 T=1 | Random Read 4KiB Q=8 T=8 | Random Write 4KiB Q=8 T=8 | Random Read 4KiB Q=32 T=1 | Random Write 4KiB Q=32 T=1 | Random Read 4KiB Q=1 T=1 | Random Write 4KiB Q=1 T=1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ext4/LVM RAID 6 | HP P420i | MB/s | 1706 | 1205 | 3653 | 1892 | 711 | 538 | 262 | 112 | 88 | 21 |
| ext4/LVM RAID 6 | HP P420i | IOPS | 3333 | 2442 | 111 | 57 | 177834 | 134528 | 65746 | 28170 | 22033 | 5383 |
| ZFS RAID-Z2 | HP H220 | MB/s | 3034 | 1724 | 1515 | 1491 | 3361 | 1587 | 547 | 331 | 535 | 316 |
| ZFS RAID-Z2 | HP H220 | IOPS | 5925 | 3368 | 46 | 45 | 840483 | 396798 | 136989 | 82914 | 133856 | 79226 |

I have no clue what these numbers tell me exactly. What I can see is that in my case software RAID is faster in most tests; only the sequential Q=32 tests favor the hardware RAID card. So I'm glad I went that route. Here are some graphs to show the same numbers graphically. I also added the test results for my second server, which has two consumer Crucial MX500 SSDs. The first two show the results of the script with 1024MB as test size.

The next two show the same thing, but this time with 2048MB as test size.
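One thing that helped me read the benchmark table: for a fixed block size, throughput is simply IOPS multiplied by the block size, so each MB/s row and the IOPS row under it are two views of the same measurement. The Python sketch below checks that on a handful of cells copied from the table (the selection is mine). It computes decimal megabytes (1 MB = 1,000,000 bytes); I haven't checked which definition of a megabyte the script itself uses.

```python
# A few cells copied from the benchmark table:
# (description, block size in KiB, IOPS, MB/s as reported by the script)
SAMPLES = [
    ("ext4/LVM RAID 6, 512KiB seq. read Q=1 T=1", 512, 3333, 1706),
    ("ZFS RAID-Z2, 512KiB seq. read Q=1 T=1", 512, 5925, 3034),
    ("ext4/LVM RAID 6, 4KiB random read Q=8 T=8", 4, 177834, 711),
    ("ZFS RAID-Z2, 4KiB random read Q=8 T=8", 4, 840483, 3361),
    ("ZFS RAID-Z2, 4KiB random write Q=1 T=1", 4, 79226, 316),
]


def throughput_mb_s(block_kib: int, iops: float) -> float:
    """Throughput in decimal MB/s for a given block size (KiB) and IOPS."""
    return iops * block_kib * 1024 / 1_000_000


def relative_gap(block_kib: int, iops: float, reported: float) -> float:
    """Fractional difference between IOPS x block size and the reported MB/s."""
    return abs(throughput_mb_s(block_kib, iops) - reported) / reported


if __name__ == "__main__":
    for label, block_kib, iops, reported in SAMPLES:
        calc = throughput_mb_s(block_kib, iops)
        gap = relative_gap(block_kib, iops, reported)
        print(f"{label}: {calc:.0f} MB/s computed vs {reported} MB/s reported ({gap:.1%} apart)")
```

For these cells the computed and reported values land within 3% of each other, which is about the size of the difference between counting in 1000s and 1024s. It also explains why the Seq. Q=32 columns show so few IOPS: those tests move much larger blocks per operation.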

Flash HBA

Most HBA cards you can buy come with IR firmware by default. In this mode the card takes care of the RAID functionality itself (like the P420i in the benchmarks above). I didn't want that; I wanted the disks passed straight through to ZFS, so I had to (cross)flash the Dell card to IT mode. The procedure is described in How to flash a Dell Perc H310 card.

For the HP server I had bought an HBA card, so I thought that one wouldn't have to be flashed. Well, unfortunately the latest firmware (version 15) contains either a bug or an unwanted change: it made the fans "idle" at 30%, at which they make quite a lot of noise. After downgrading to version 13, the fans are back at 8% idle, which is basically silent. The procedure is described in How to flash an HP H220 card.

Disks

For storage I bought a batch of used 3TB SAS disks. They were advertised as used, but only for a relatively short time. Most of them indeed had only run for ~7000-8000 hours (just under a year). There is a lot of discussion about the perceived safety of RAID 5. Search for "RAID 5 is dead" and decide for yourself. I myself lost almost all my data when a RAID 5 array failed, so in my new server I went for RAID-Z2 (the ZFS counterpart of RAID 6) with a hot spare. I also have a few backup drives on the shelf.
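The trade-off between RAID 5 and RAID-Z2 comes down to how many disks' worth of space go to parity: one for RAID 5 (RAID-Z1 in ZFS terms), two for RAID 6 / RAID-Z2, which is why RAID-Z2 survives two failed disks at the same time. A hot spare sits idle until a disk fails and adds no capacity. This Python sketch does that back-of-the-envelope math for a single vdev. It ignores ZFS metadata, padding and reserved space, so a real pool comes out somewhat smaller, and the 7-disk layout in the example is hypothetical, not necessarily the one in my server.

```python
# One TiB expressed in decimal TB (about 1.0995); disk labels use TB, tools often show TiB.
TIB_IN_TB = 1024**4 / 1000**4


def usable_capacity(disks: int, disk_size: float, parity: int, hot_spares: int = 0) -> float:
    """Rough usable size of one parity vdev, in the same unit as disk_size.

    parity=1 is RAID 5 / RAID-Z1, parity=2 is RAID 6 / RAID-Z2.
    Hot spares are excluded because they hold no data until a disk fails.
    ZFS metadata, padding and reserved space are ignored.
    """
    data_disks = disks - hot_spares - parity
    if data_disks < 1:
        raise ValueError("not enough disks for this parity level")
    return data_disks * disk_size


if __name__ == "__main__":
    # Hypothetical layout: 7 x 3 TB disks, one of them kept as a hot spare
    for name, parity in (("RAID-Z1", 1), ("RAID-Z2", 2)):
        tb = usable_capacity(7, 3, parity=parity, hot_spares=1)
        print(f"{name}: {tb} TB usable (about {tb / TIB_IN_TB:.1f} TiB), "
              f"survives {parity} failed disk(s) at once")
```

In other words, going from RAID-Z1 to RAID-Z2 costs one disk of capacity and buys tolerance for a second failure, for example during a long resilver.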

Installing and configuring Proxmox

The full how-to (How to install and configure Proxmox) can again be found on my website. Here I just want to say something about the amount of time it normally costs me to get something configured or fixed. You really need to dig into the forums and documentation and, in general, scour the web. In the end you get something working, but only after going off on multiple tangents. I don't have a formal education in this field, so I have to Google all the terminology and basic concepts. This turns a statement like "just do this or that to fix your problem" into a quest, as I have to learn what "this" and "that" mean, but also how to actually perform the actions described.

Installing and configuring TrueNAS

Proxmox supports ZFS natively, so I could have used the node itself for all file sharing. However, I really like the GUI of TrueNAS and the way it takes care of all these file-sharing services. For me this works so much better than having to fiddle with configuration files. It also runs automatic disk checks and warns me when a disk needs attention. That is something you can do manually in Linux, but you have to strike a balance between complete understanding and the time you want to and can invest in it. For me, this is perfect.

More in How to install TrueNas on Proxmox.
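For comparison, the manual route for the most basic health check is `smartctl` from smartmontools. Below is a minimal Python sketch, assuming smartmontools 7.0 or newer (for the `-j` JSON output), root access, and that the overall verdict is in the report's `smart_status.passed` field. It does far less than TrueNAS, which also schedules self-tests and sends alerts. Device names like `/dev/sda` are just examples.

```python
import json
import subprocess
import sys


def smart_passed(report: dict) -> bool:
    """True if a smartctl JSON report says the overall health check passed."""
    return bool(report.get("smart_status", {}).get("passed", False))


def check_disk(device: str) -> bool:
    """Run `smartctl -H -j` on one device and return its health verdict."""
    result = subprocess.run(["smartctl", "-H", "-j", device],
                            capture_output=True, text=True)
    # smartctl's exit status is a bit mask (non-zero can just mean warnings),
    # so read the JSON instead of treating any non-zero exit code as failure.
    return smart_passed(json.loads(result.stdout))


if __name__ == "__main__":
    # Example: sudo python3 smart_check.py /dev/sda /dev/sdb
    for dev in sys.argv[1:]:
        print(dev, "PASSED" if check_disk(dev) else "CHECK THIS DISK")
```

Keeping the parsing in `smart_passed` separate from running the command makes it easy to test the logic without real disks.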

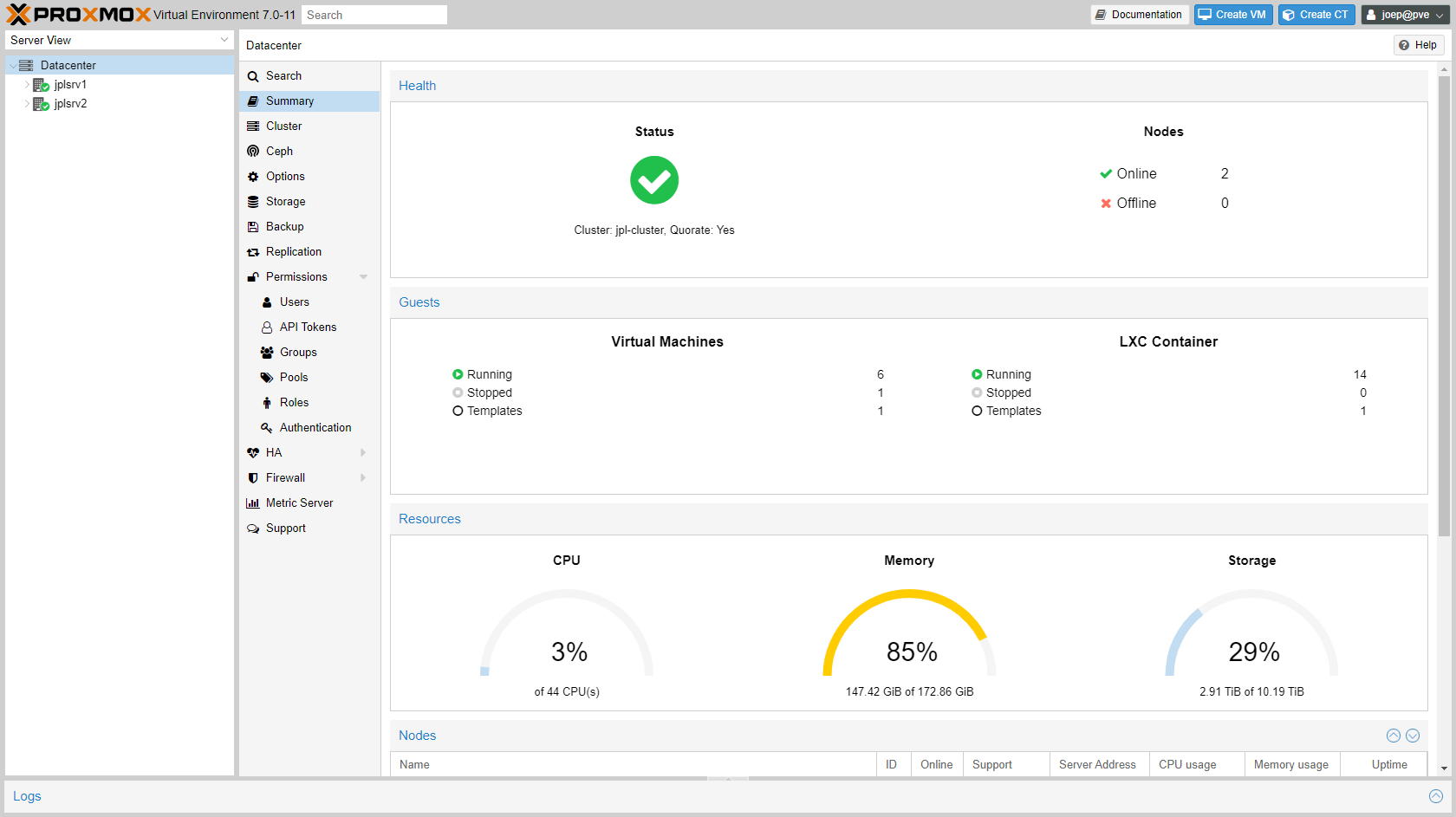

The result: a Proxmox cluster

I now have a server setup that is fully up and running. It currently runs 6 full virtual machines and 14 LXC containers.

The nodes are linked together in a cluster. This makes it possible to:

- Use a single login
  - Single username-password
  - Single private-public key
- Control the nodes, VMs and containers from a single GUI
- Share configuration (files) between nodes
- Migrate VMs and containers between nodes
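Migrating a VM is a few clicks in the GUI, or `qm migrate <vmid> <target> --online` in a node's shell. For scripting it, Proxmox VE also has an HTTP API on port 8006. The Python sketch below only builds the request for the `POST /nodes/{node}/qemu/{vmid}/migrate` endpoint; it assumes an API token created under Datacenter > Permissions > API Tokens, and the host name, node names, VM ID and token are hypothetical placeholders.

```python
import urllib.parse
import urllib.request


def migrate_request(host: str, node: str, vmid: int, target: str, token: str,
                    online: bool = True) -> urllib.request.Request:
    """Build (but don't send) a Proxmox VE API request that migrates VM `vmid`
    from `node` to `target`. `token` has the form USER@REALM!TOKENID=SECRET."""
    url = f"https://{host}:8006/api2/json/nodes/{node}/qemu/{vmid}/migrate"
    # The API takes form-encoded parameters; booleans are sent as 1/0.
    body = urllib.parse.urlencode({"target": target, "online": int(online)}).encode()
    request = urllib.request.Request(url, data=body, method="POST")
    request.add_header("Authorization", f"PVEAPIToken={token}")
    return request


if __name__ == "__main__":
    # Hypothetical values: node "pve1" hosts VM 101, which should move to "pve2"
    req = migrate_request("pve1.example.lan", "pve1", 101, "pve2",
                          "root@pam!migrate=00000000-0000-0000-0000-000000000000")
    print(req.get_method(), req.full_url)
    print(req.data.decode())
```

To actually send it, pass the request to `urllib.request.urlopen`. Proxmox uses a self-signed certificate by default, so you either install a proper certificate or give `urlopen` an SSL context that trusts the node's CA.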

All very cool and convenient, but I'm not done yet. On my wishlist is a 10Gbit network between the servers and TrueNAS, so that making backups, migrating virtual machines and storing data will be faster. I also really want a UPS. But that's all for later. I have already spent way more than I budgeted at the start😅.