Benchmark Proxmox Virtual Disk settings

Nonetheless, as long as you are writing relatively small amounts of data, amounts that fit into memory, it is definitely worth tweaking the disk (well, cache) settings.

Every virtual machine needs a virtual hard disk to store at least the operating system. Proxmox offers multiple options to virtualize this boot disk in a way the OS recognizes and can work with. That is very nice, but now we have to choose. Which one is best?

On the Proxmox wiki, the team describes best practices for Windows VMs. That's great, but they don't really explain why these are best practices. What does Write back do? How does it work?

For your virtual hard disk select "SCSI" as bus with "VirtIO SCSI" as controller. Set "Write back" as cache option for best performance (the "No cache" default is safer, but slower) and tick "Discard" to optimally use disk space (TRIM).
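For readers who prefer the command line, the settings in that recommendation can also be expressed as a `qm set` call. The snippet below is only a sketch that builds such a command string in Python. The VM ID `100`, the storage name `local-lvm` and the 32 GiB size are made-up placeholders, and the helper `recommended_disk_command` is hypothetical, not part of any Proxmox tooling.

```python
import shlex


def recommended_disk_command(vmid: int, storage: str, size_gib: int) -> str:
    """Build a `qm set` command for a new SCSI disk with the wiki's suggested options.

    Assumptions: the disk goes into slot scsi0 and a new volume of
    `size_gib` GiB is allocated on `storage` (STORAGE:SIZE syntax).
    """
    # cache=writeback -> "Write back" in the GUI, discard=on -> the "Discard" tick box
    disk_spec = f"{storage}:{size_gib},cache=writeback,discard=on"
    args = [
        "qm", "set", str(vmid),
        "--scsihw", "virtio-scsi-pci",  # the "VirtIO SCSI" controller
        "--scsi0", disk_spec,
    ]
    return shlex.join(args)


if __name__ == "__main__":
    # Placeholder values, adjust to your own VM and storage.
    print(recommended_disk_command(100, "local-lvm", 32))
```

The script only prints the command, so it can be reviewed before being run on the Proxmox host.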

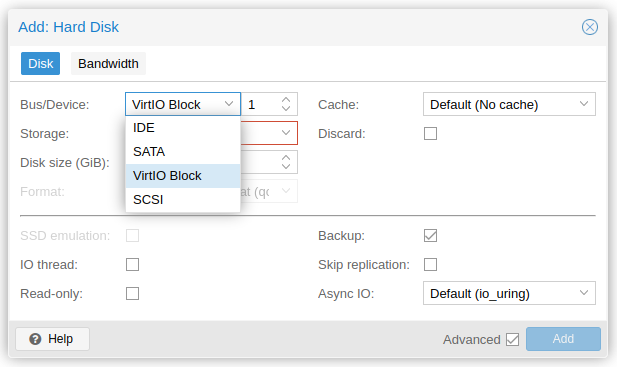

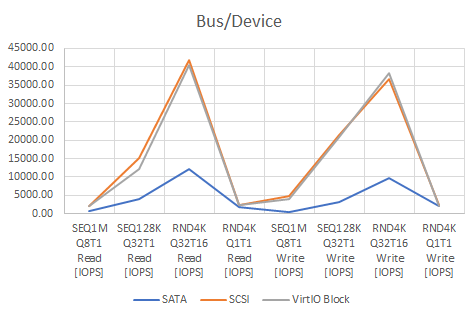

Bus/Device type

Proxmox, through QEMU, can emulate four types of data buses:

- IDE
- SATA
- SCSI (often called VirtIO SCSI in the forums)
- VirtIO (often called VirtIO block in the forums)

In general, using IDE or SATA is discouraged as they are relatively slow. In their best practice guides, Proxmox recommends using VirtIO SCSI, that is, the SCSI bus connected to the VirtIO SCSI controller (selected by default on the latest Proxmox version). This assumes you run Proxmox on a server with a fast disk array; more on that later.

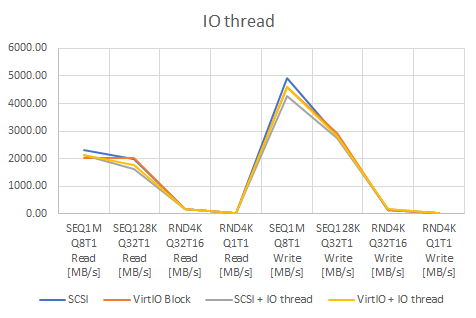

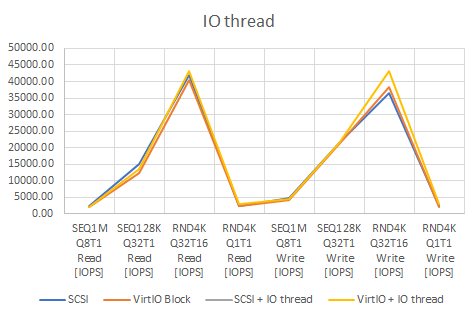

IO thread

Each virtual disk you create is connected to a virtual controller. For best performance each controller only has a single disk attached. The IO thread option "creates one I/O thread per storage controller, rather than a single thread for all I/O. This can increase performance when multiple disks are used and each disk has its own storage controller."

In the case of this benchmark it shouldn't matter as we only benchmark one disk at a time and all disks are connected to the same controller.

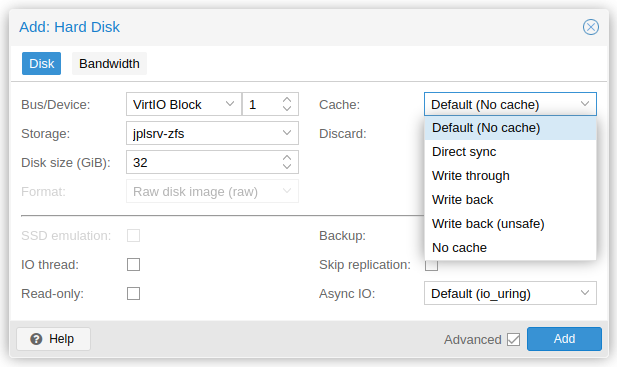

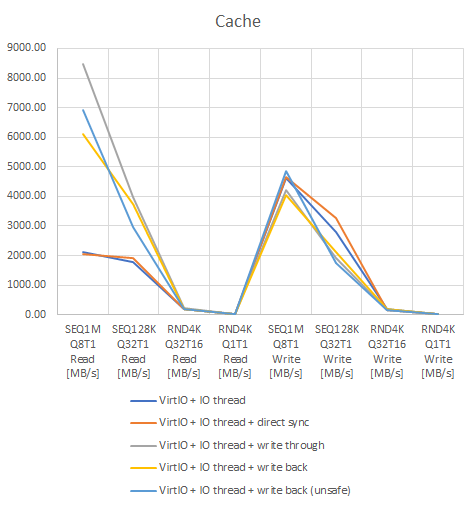

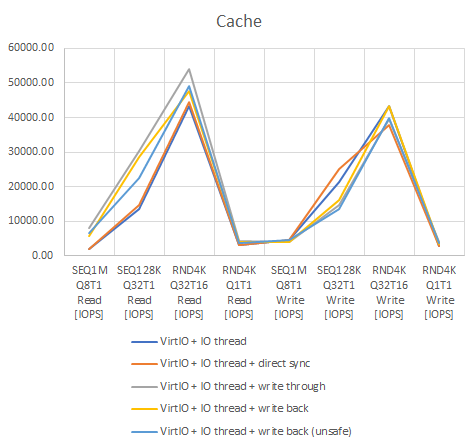

Cache

Proxmox also offers five cache modes:

- Direct sync
- Write through
- Write back
- Write back (unsafe)
- No cache

All have their specific use cases, which I cannot comment on. My knowledge is too limited to provide any examples or guidance. In this post I will only show the hard data, no actual recommendation. So before you change this, make sure you understand the implications: a power outage can corrupt your data if you select the "wrong" cache method for your hardware.

For the Write back modes, the risk can be reduced (or perhaps even eliminated?) by using a UPS and/or BBU-backed controllers for hardware RAID. Anyway, for more in-depth information, check the Proxmox wiki: Performance Tweaks.
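As a rough orientation, the five modes differ in three properties that QEMU documents for its cache settings: whether the host page cache is used, whether a write is only acknowledged once it has reached storage, and whether flush requests from the guest are ignored. The table below encodes my reading of that documentation as a small Python lookup. The `CacheMode` type and the helper function are hypothetical names for illustration only.

```python
from typing import NamedTuple


class CacheMode(NamedTuple):
    qemu_value: str        # value of the disk's cache= option
    host_page_cache: bool  # True if I/O passes through the host page cache
    write_through: bool    # True if a write is acknowledged only once it reached storage
    ignores_flush: bool    # True if flush requests from the guest are dropped


# Keys are the labels shown in the Proxmox GUI.
CACHE_MODES = {
    "Direct sync":         CacheMode("directsync",   False, True,  False),
    "Write through":       CacheMode("writethrough", True,  True,  False),
    "Write back":          CacheMode("writeback",    True,  False, False),
    "Write back (unsafe)": CacheMode("unsafe",       True,  False, True),
    "No cache":            CacheMode("none",         False, False, False),
}


def modes_using_host_cache() -> list[str]:
    """Return the GUI labels of all modes that go through the host page cache."""
    return [label for label, mode in CACHE_MODES.items() if mode.host_page_cache]


if __name__ == "__main__":
    for label, mode in CACHE_MODES.items():
        print(f"{label:20} cache={mode.qemu_value:13} host cache: {mode.host_page_cache}")
```

The modes that use the host page cache are the ones where repeated reads can be served from host memory, which may explain a good part of any read speed-up they show in a benchmark.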

Other settings

For this write-up we leave the other settings unchanged. Discard is useful to save disk space, not for performance directly. Async IO would be interesting, but so far I haven't touched it and I haven't found any clear documentation on it.

Testing on Windows

Now, let's start testing. In my specific case I was interested in the performance gains when using SCSI or VirtIO vs IDE or SATA in Windows. In Linux the former are supported out of the box, so why not use them?

However, to use SCSI and VirtIO in Windows you have to install the drivers manually, either during Windows installation or afterwards. Adding them later is rather cumbersome (see "Setup On Running Windows"), as it is not just a matter of switching a setting. Is it worth all this hassle? (Spoiler: yes, it is.)

I'm running VMs with Windows 10, 11 and Server 2019. I have installed the drivers both during and after installation, so I can confirm it all works as described. For this specific benchmark a VM with Windows 11 was used. The VM had 8 GB memory, 8 cores and was based on the pc-i440fx-6.2 machine (I cannot boot it with q35, which is the opposite of what is reported in https://forum.proxmox.com/threads/windows-11-guest-has-not-initialized-the-display-yet.98206/).

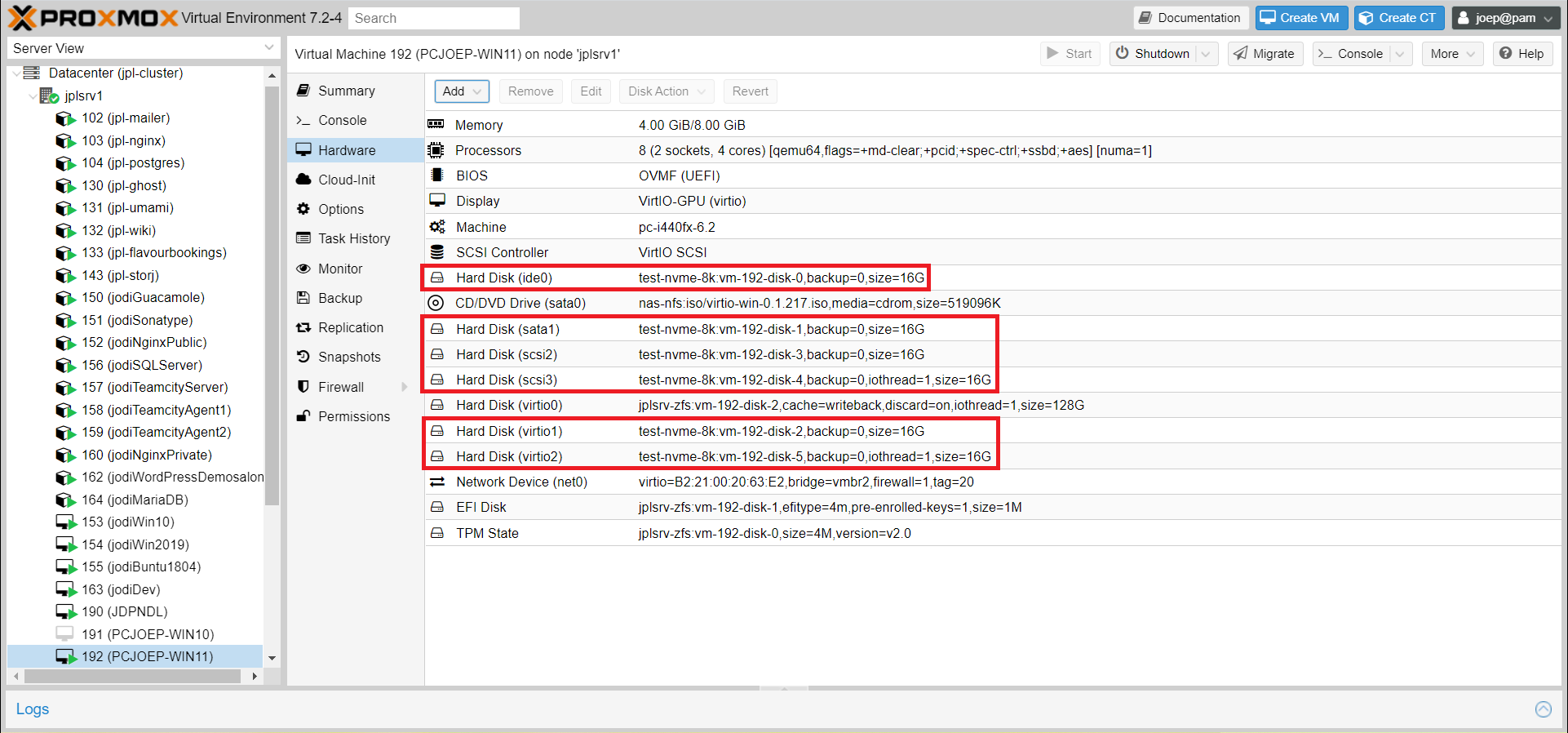

Adding the disks

I had two unused NVMe SSDs (Kingston A2000 1TB), which I installed in my server using two PCIe brackets. With these drives I created a mirrored ZFS pool named test-nvme with default settings (Block Size was left at the default of 8k). In the VM window I created 16 GB disks on test-nvme with different buses and settings. I then attached them all to the VM.

After starting Windows, all drives were initialized and formatted with the NTFS file system and a 4k allocation unit size (I don't know why I did that). Each disk was then put through CrystalDiskMark with the NVMe profile selected.

The one exception was the IDE disk. I tried re-adding it multiple times, but gave up. So unfortunately it is not in the results below.

Results

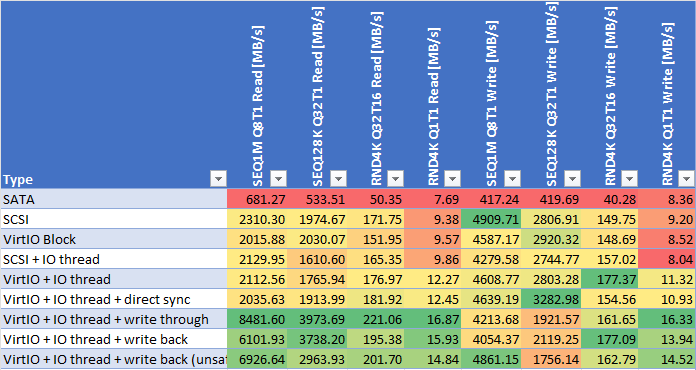

Throughput

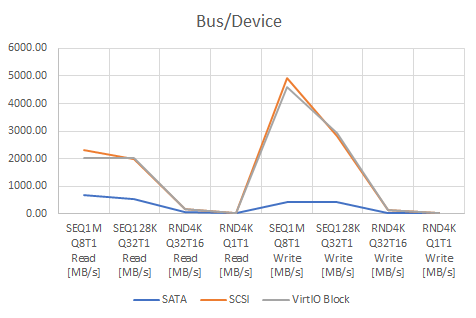

Bus type: The raw numbers confirm that SATA is indeed slow compared to SCSI and VirtIO, in some cases by up to a factor of 10! Seeing this, I left SATA out of the cache benchmarks.

IO thread: It doesn't really seem to do much. I'd say the differences are within the variance/error range of the measurements.

Cache setting: This definitely makes a huge difference. Read speeds go up by a factor of 3-4. Sequential write speeds suffer, though random write speeds improve.

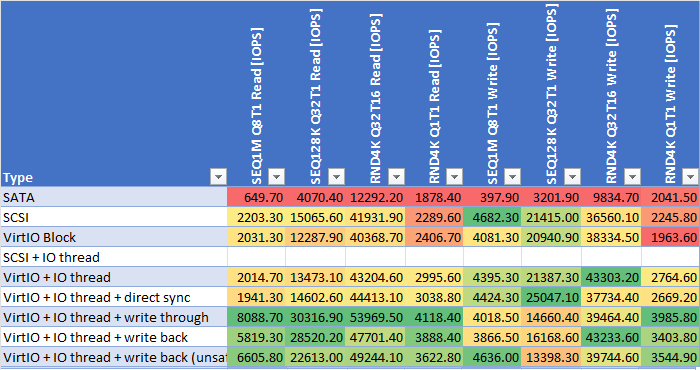

IOPS

Bus type: Again, the raw numbers confirm that SATA is indeed slow compared to SCSI and VirtIO. In some cases the difference is orders of magnitude.

IO thread: Again, it's hard to see any improvement, which was to be expected as we only use one disk at a time.

Cache setting: Caching makes a huge difference. Read IOPS go up by a factor of 1.2-4. Sequential write IOPS suffer, though random write IOPS improve.
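Throughput and IOPS are two views of the same measurement: for a fixed block size, IOPS is simply the throughput divided by the block size. The sketch below shows the conversion, assuming MB/s means 1,000,000 bytes per second. The function names are my own and the numbers in the example are made up, not taken from the benchmark.

```python
def iops_from_throughput(mb_per_s: float, block_size_bytes: int) -> float:
    """Convert throughput in MB/s (1 MB = 1,000,000 bytes) to operations per second."""
    return mb_per_s * 1_000_000 / block_size_bytes


def throughput_from_iops(iops: float, block_size_bytes: int) -> float:
    """Convert operations per second to throughput in MB/s (1 MB = 1,000,000 bytes)."""
    return iops * block_size_bytes / 1_000_000


if __name__ == "__main__":
    # Illustrative only: 100,000 random 4 KiB operations per second.
    print(throughput_from_iops(100_000, 4096), "MB/s")
    # Illustrative only: 1000 MB/s of sequential 1 MiB blocks.
    print(iops_from_throughput(1000, 1024 * 1024), "IOPS")
```

This is why a sequential test with large blocks can show high throughput at modest IOPS, while a random 4 KiB test shows the opposite.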

Conclusion

Best bus type

Use either SCSI or VirtIO. The differences are negligible, but both are far superior to SATA. Proxmox advises using SCSI as it is newer and better maintained.

IO thread yes or no

In these basic benchmarks it makes no difference. This might be the setup, or the specific use case.

Cache setting

After writing this post I think I should have proceeded with SCSI as the bus type, as it is the newer, better maintained option. However, so far all my VMs use VirtIO block devices, so I used that without actually thinking about it. It shouldn't matter too much though, as they currently (2022) perform very similarly.

Caching-wise, it is not that straightforward for me to select a winner. If reading is the main thing the disk is meant to do, then Write through is the fastest option. When sequential writing is what's needed most (storing large files, for example when recording or editing movies), either Direct sync or No cache is best. But if random write IOPS are more important, No cache or Write back is the way to go.

Overall

So what am I going to do? I will change my bus types to SCSI and keep Write back as the cache method. Until I learn more about IO thread I will just leave it on. It doesn't seem to hurt performance.